Cute cuddly robot!

In the past few months, I have been playing a bit with a great robotic platform available at the university in the Introduction to mobile robotics and Eurobot subjects.

We were provided with a pre-made robot chassis with some basic electronics, an ATMega128 board, a hefty battery, motors and Sabertooth motor drivers. With AxTheB, we built a Merkur-based construction on top of it to hold a camera-on-stick module that gives a picture of the robot’s surroundings, and a 12″ notebook that is hooked up to the webcam and the ATMega.

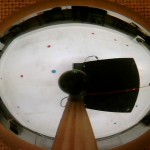

The most interesting thing is the camera. It is held up on a wooden stick, facing upwards to a parabolic mirror (i.e. a ladle), which gives it a view of its surroundings over an angle of about 320° (part of the view is obstructed by the stick). This is not my original idea; it was originally suggested and built for brmbot outdoor. We had it for the Robotour 2010 competition, and during the competition I even built some basic image recognition for it, but in the end we did not have time to integrate it into the main control software, so back then the camera served only as a holder for the GPS and compass.

More about the tasks below. It turned out that I really did not have enough time for this, so things got quite stressful at one point, but I would have felt really bad if I had given up. In the end, I managed to get things working. And as any robot builder will tell you, seeing your tiny friend roam around happily, doing whatever it’s supposed to do, is worth any stress! :-)

The source code is rather horrible. Keep in mind that it was hacked incrementally and never really cleaned up.

Brmpuk

The first task (Puck Collect from Robot Challenge 2011 in Vienna): Your tiny robot is in a ~2x2m white playground, with two corner squares painted red and blue, and with tiny red and blue pucks scattered over the playground. Its robotic opponent also sits in the playground. Each player has a color assigned, and its goal is to accumulate as many pucks of its color in its corner as possible, while avoiding putting pucks of the other color in that corner. There is a deadline of two minutes.

(In addition, the robot also has a sort of plough at the front, where it collects and pushes the pucks, since the few centimeters directly in front of the robot are invisible to the camera.)

Unfortunately, I was not able to spend a lot of time on the project (or attend the awesome lectures) due to sickness (to play with robots, you have to come to the lab) and scheduling problems. Nevertheless, I was able to finish at least a basic version of the robot and it kind of did what it was supposed to do. (For a real competition, though, more work would be needed: the construction was very frail and the bot could not properly unstick itself when it got misled, e.g. by colors seen outside of the playground.)

Source code: brmpuk.git

Blackline

The second task was a lot simpler – just follow a thick black line laid on the floor. With the communications, firmware and video processing infrastructure already debugged, it was just a matter of replacing the image recognition and I managed to get everything done and debugged in just a couple of hours.

To briefly describe the workings of the robot: The camera provides a picture of the neighborhood of the robot in the YUYV format. The software periodically grabs frames from the camera and looks at three rectangles covering the area slightly in front of the robot – straight ahead, to the right and to the left. (There is a slight gap to give the robot a chance to react with sufficient head-start, deal well with sharp turns and skip over gaps.)

The contents of the three rectangles are then analyzed; YUYV is a convenient pixel format for image recognition since brightness (luma) and color (chrominance) are already separated. First, to detect a dark line on a light background, it is enough to look at the Y-values of the pixels. Second, we are detecting a high-contrast object that is always smaller than the rectangle we look at. So we do a trivial thing: take the luma difference between the darkest and the brightest pixel in the rectangle. A rectangle with a large difference is considered active. We do not get dark pixels from dirt since the camera image is sufficiently blurry, and the only large enough dark object on the white background is the line, so this works perfectly, can adjust to the overall brightness of the image (to a degree), and is really simple and foolproof.
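
To make the idea concrete, here is a minimal Python sketch of the luma-contrast check. It is not the code from brmpuk.git; the function names, the `(x, y, w, h)` rectangle layout and the threshold of 80 are assumptions made up for illustration. The only fact it relies on is that packed YUYV stores two bytes per pixel, with the luma byte first.

```python
def luma_contrast(frame, width, rect):
    """Return max(Y) - min(Y) inside rect = (x, y, w, h) of a packed YUYV frame.

    frame is a bytes-like object, width is the image width in pixels.
    The rectangle is assumed to be non-empty and inside the image.
    """
    x0, y0, w, h = rect
    stride = width * 2  # YUYV: 2 bytes per pixel (Y0 U Y1 V ...)
    darkest, brightest = 255, 0
    for y in range(y0, y0 + h):
        row = y * stride
        # The luma of pixel x sits at byte offset 2*x within its row,
        # so a step of 2 skips the interleaved U/V bytes.
        lumas = frame[row + 2 * x0 : row + 2 * (x0 + w) : 2]
        darkest = min(darkest, min(lumas))
        brightest = max(brightest, max(lumas))
    return brightest - darkest


def rect_active(frame, width, rect, threshold=80):
    """Hypothetical activity test: the rectangle sees the line if contrast is high."""
    return luma_contrast(frame, width, rect) >= threshold
```

Because only the difference within a rectangle matters, a uniformly darker or brighter image shifts both extremes together, which is what makes the check tolerant of overall brightness changes. The threshold would have to be tuned on real camera frames.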

The robot driving strategy is then trivial. After each frame, the control program issues updated speeds for both wheels. If it detects that the line is being followed, it goes straight ahead. Otherwise, if one of the side rectangles is active, it stops one motor and starts turning in that direction. If no rectangle is active, it may be that a sharp turn has been encountered: at one point both the ahead rectangle and a side rectangle were active, at the next moment none was. Therefore, it looks at the last time one of the side rectangles was active and goes in that direction. Similar handling is used when both side rectangles are active (while turning to one side, the other rectangle may blink to activity, e.g. due to a table edge).
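
The decision logic above can be sketched as a tiny state machine. Again, this is an illustrative reconstruction rather than the actual control program: the class name, the speed value and the choice to drive straight before any side has ever been seen are assumptions.

```python
class LineFollower:
    """Maps the three rectangle states to (left_wheel, right_wheel) speeds."""

    def __init__(self, speed=100):
        self.speed = speed
        self.last_side = None  # side rectangle that was last active on its own

    def update(self, left, ahead, right):
        # Remember the side only when exactly one side rectangle is active;
        # "both active" is treated as ambiguous and does not overwrite it.
        if left != right:
            self.last_side = "left" if left else "right"

        if ahead:
            # The line is straight ahead: keep following it.
            return (self.speed, self.speed)
        if self.last_side == "left":
            # Stop the left wheel so the robot pivots to the left.
            return (0, self.speed)
        if self.last_side == "right":
            return (self.speed, 0)
        # Assumption: no side seen yet, so just keep going straight.
        return (self.speed, self.speed)
```

Calling `update()` once per frame with the three activity flags yields the new wheel speeds. The "none active" and "both active" cases fall through to the remembered side, which matches the sharp-turn handling described above.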

Source code: brmpuk.git blackline

P.S.: I just wasted three hours trying to work around bugs in WordPress, jQuery(?) and YouTube/Chromium so that I could publish this post. How are you people managing to live in this Web 2.0 world?!
