So I didn’t get around to seriously playing with Keras (beyond running a few examples) until now. Keras is a powerful library for building fully-differentiable machine learning models, aka neural networks. And I have been a bit surprised by how tricky it actually was for me to get a simple task running, despite (or maybe because of) all the docs already available.

The thing is, many of the “basic examples” gloss over exactly what the inputs and, mainly, the outputs look like, and that’s important. Especially since for me the archetypal simplest machine learning problem is binary classification, while in Keras the canonical task is categorical classification. Only after fumbling around for a few hours did I realize this fundamental rift.

The examples (besides LSTM sequence classification) silently assume that you want to classify into categories (e.g. to predict words), not do a binary 1/0 classification. The consequence is that if you naively copy the example MLP at first, before learning to think about it, your model will never learn anything and, to add insult to injury, will always show the accuracy as 1.0.

So, there are a few important things you need to do to perform binary classification:

- Pass output_dim=1 to your final Dense layer (this is the obvious one).

- Use sigmoid activation instead of softmax – obviously, softmax on a single output will always normalize whatever comes in to 1.0.

- Pass class_mode='binary' to model.compile() (this fixes the accuracy display, possibly more; you want to pass show_accuracy=True to model.fit()).
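To make the softmax point concrete, here is a minimal pure-Python sketch (no Keras involved). The raw scores and labels are made up for illustration, and the argmax-based accuracy is an assumption about how a categorical accuracy is typically computed, not a copy of what Keras does internally.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of raw scores."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(x):
    """Logistic sigmoid: squashes one raw score into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def argmax(values):
    """Index of the largest element."""
    return max(range(len(values)), key=values.__getitem__)

# Hypothetical raw outputs of a final 1-unit layer, with made-up 0/1 labels.
raw_scores = [-3.0, -1.0, 2.5]
labels = [0, 1, 1]

# Softmax over a single output normalizes it against itself.
softmax_out = [softmax([s])[0] for s in raw_scores]
# Sigmoid keeps the information carried by the raw score.
sigmoid_out = [sigmoid(s) for s in raw_scores]

# Assumed categorical accuracy: compare argmax of prediction and label.
# With one-element vectors both argmaxes are always 0, so it always matches.
categorical_acc = sum(
    argmax([p]) == argmax([y]) for p, y in zip(softmax_out, labels)
) / len(labels)

# Binary accuracy: threshold the sigmoid output at 0.5.
binary_acc = sum(
    (p > 0.5) == bool(y) for p, y in zip(sigmoid_out, labels)
) / len(labels)

print(softmax_out)
print(sigmoid_out)
print(categorical_acc, binary_acc)
```

The softmax column is constant no matter what the layer computes, so there is nothing for the loss to push on, while the argmax comparison trivially agrees on every sample. The thresholded sigmoid output, in contrast, actually registers the misclassified middle sample.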

Other lessons learned:

- For some projects, my approach of first cobbling up an example from existing code and then thinking harder about it works great; for others, not so much…

- In IPython, do not forget to reinitialize model = Sequential() in some of your cells – a lot of confusion ensues otherwise.

- Keras is pretty awesome and powerful. Conceptually, I think I like NNBlocks’ usage philosophy more (regarding how you build the model), but sadly that library is still at a very early stage (I have created a bunch of gh issues).

(Edit: After a few hours, I toned down this post a bit. It wasn’t meant at all to be an attack on Keras, though someone might perceive it as such. It is just a word of caution to fellow Keras newbies. And it shouldn’t take much to improve the Keras docs.)

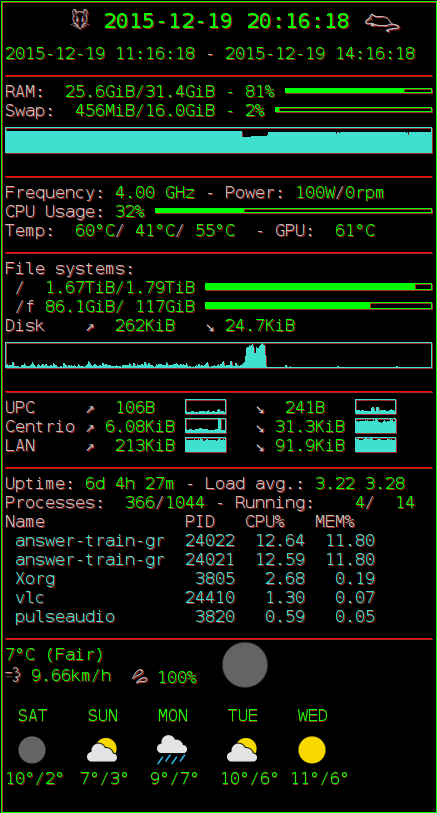

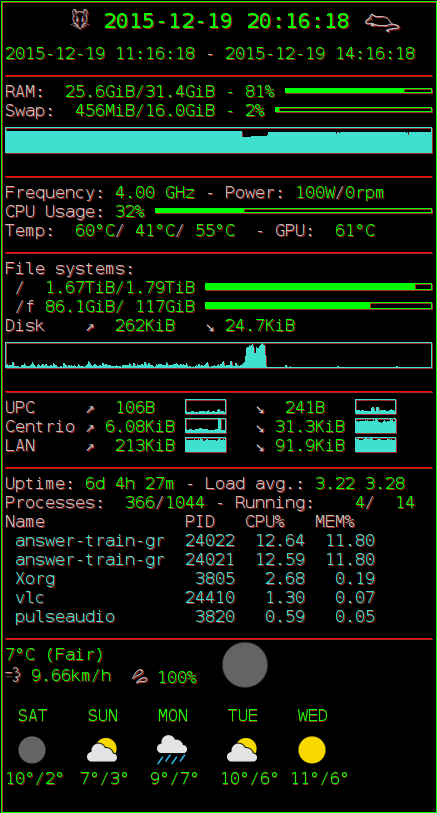

A couple of weeks ago, I created my own fairly elaborate setup of the Conky system monitor. I have been wanting to fix up some of the weather display aspects, but realistically I’m not getting around to that anytime soon.

So, I have pushed it out to GitHub now.

I’m still working on YodaQA and there is quite some interest in it in my mailbox. One thing leads to another and our startup Ailao already has a few first customers; we work together on various related semantic NLP / search projects.

In YodaQA, we have a much neater web interface as well as a mobile app, as the natural way to interact with a QA system is using your voice. Plus, on a limited domain (movies), we are getting pretty close to crossing the 80% mark for accuracy on simpler questions, entering the “magic zone” where people might start really trusting the system. A few essential blocks for that are still in the pipeline, though.

I’ll try to post a bit more about YodaQA and other work we are doing in the coming weeks / months (as well as some of my hobby projects, of course).

For a course taught by Jan Šedivý, I prepared a presentation on building apps around the semantic web and linked data. See it here for an intro to the tech; it also includes two silly web mashups that might be inspiring.

I was working on Question Answering last year. Guess what, I’m still on it!

I threw away my first prototype BlanQA and started building a second system, YodaQA. It currently has reasonable performance of answering about a third of trivia questions properly and listing the correct answer in top five candidates for half of the questions – without doing any googling or binging.

A few weeks ago, I published the first paper on YodaQA. With a few fellow scientists, we also re-started the qa-oss Google Group on open source question answering systems.

Today, I finally made a proper homepage for YodaQA and launched a live demo of the system. It’s pretty primitive, but hopefully will serve as a proof of concept.

So what’s the strongest program you can make with minimum effort and code size while keeping maximum clarity? Chess programmers have been exploring this for a long time, e.g. with Sunfish, and that inspired me to try out something similar in Go over a few evenings recently:

https://github.com/pasky/michi

Unfortunately, while Chess rules are perhaps more complicated for humans, Chess is much easier for computers to play than Go! So the code is longer and more complicated than Sunfish, but hopefully a Computer Go newbie can still understand it over a few hours. I will welcome any feedback and/or pull requests.

Unlike other minimalistic UCT Go players, I wanted to create a program that actually plays reasonably. It can beat many beginners and on 15×15 fares about even with GNUGo; even on 19×19, it can win about 20% of its games against GNUGo on a beefier machine. Based on my observations, the limiting factor is time: Python is sloooow, and a faster language with the exact same algorithm should be able to speed this up at least 5x, which should mean a level-up of at least two ranks. I also intend the code as my legacy, as I am not sure if I’ll ever get back to Pachi; these are the parts of a Computer Go program I consider most essential. The biggest code omission with respect to strength is probably the lack of 2-liberty semeai reading and of more sophisticated self-atari detection.

P.S.: The 6k KGS estimate is based on playtesting against GNUGo over 40-60 games; the winrate is about 50% with 4000 playouts/move. Best I can do… But you can connect the program itself to KGS too:

http://www.gokgs.com/gameArchives.jsp?user=michibot