Wow, what a jargon-filled post title. Basically, we do a lot of our deep learning currently on the AWS EC2 cloud – but to use the GPU there with all the goodies (up to CuDNN that supports modern Theano’s batch normalization) is a surprisingly arduous process which you basically need to do manually, with a lot of trial and error and googling and hacking. This is awful, mind-boggling and I hate that everyone has to go through this. So, to fix this bad situation, I just released a community AMI that:

- …is based on Ubuntu 16.04 LTS (as opposed to 14.04)

- …comes with CUDA + CuDNN drivers and toolkit already set up to work on g2.2xlarge instances

- …has Theano and Keras preinstalled and preconfigured so that you can run the Keras ResNet model on a GPU right away (or anything else you desire)

To get started, just spin up a GPU (g2.2xlarge) instance from community AMI ami-f0bde196 (1604-cuda80-cudnn5110-theano-keras), ssh in as the ubuntu@ user and get going! No hassles. But of course, EC2 charges apply.

Edit (errata): Actually, there’s a bug – sorry about that! Out of the box, the nvidia kernel driver is not loaded properly on boot. I might update the AMI later, for now to fix it manually:

- Edit

/etc/modprobe.d/blacklist.conf (using for example sudo nano) and append the line blacklist nouveau to the end of that file

- Run

sudo update-initramfs -u

- Reboot. Now, everything should finally work.

This AMI was created like this:

- The stock Ubuntu 16.04 LTS AMI

- NVIDIA driver 367.57 (older drivers do not support CUDA 8.0, while this is the last driver version to support the K520 GRID GPU used in AWS)

- To make the driver setup go through, the trick to install

apt-get install linux-image-extra-`uname -r` per

- CUDA 8.0 and CuDNN 8.0 set up from the official though unannounced NVIDIA Debian packages by replaying the nvidia-docker recipes

- bashrc modified to include cuda in the path

- Theano and Keras from latest Git as of writing this blogpost (feel free to git pull and reinstall), and some auxiliary python-related etc. packages

- Theano configured to use GPU and Keras configured to use Theano (and the “th” image dim ordering rather than “tf” – this is currently non-default in Keras!)

- Example Keras deep learning models, even an elephant.jpg! Just run

python resnet50.py

- Exercise: Install TensorFlow on the system as well, release your own AMI and post its id in the comments!

- Tip: Use nvidia-docker based containers to package your deep learning software; combine it with docker-machine to easily provision GPU instances in AWS and execute your models as needed. Using this for development is a hassle, though.

Enjoy!

We have our mice TV now streaming our colony of mus minutoides at the canonical URL http://mice.or.cz/ but it would be nice if you could watch them in your web browser (without flash) instead of having to open a media player for the purpose.

I gave that some serious prodding. We still use vlc with the same config as in the original post (mp4v codec + mpegts container). Our video source is an IP cam producing mp4v via rtsp and an important constraint is CPU usage as it runs on my many-purpose server (current setup consumes 10% of one CPU core). We’d like things to work in Debian’s chromium and iceweasel, primarily.

It seems that in the HTML5 world, you have these basic options:

- MP4/H264 in MP4 – this *does not work* with live streaming because you need to make sure the browser receives a correct header with metadata which normally occurs only at the top of the file; it might work with some horrible custom code hacks but nothing off-the-shelf

- VP80/VP90 in webm – this works, but encoding consumes between 150%-250% CPU! even with low bitrates; this may be okay for dedicated streaming servers but completely out of the question for me

- Theora in Ogg – this almost works, but the stream stutters every few seconds (or slips into endless buffering), making it pretty hard to watch; apparently some keyframes are lost and Theora homepage gives a caveat that Ogg encoding is broken in VLC; the CPU usage is about 30%, which would have been acceptable

That’s it for the stock video tag formats, apparently. There are two more alternatives:

- HTTP Live Stream (HLS) has no native support in browsers outside of mobile, might work with a hack like https://github.com/RReverser/mpegts but you may as well use MSE then

- Media Source Extensions (MSE) seem to allow basically implementing decoding custom containers (in javascript) for any codecs, which sounds hopeful if we’d just like to pass mp4v (or h264) through. The most popular such container is DASH, which seems to be all about fragmenting video to smaller HTTP requests with per-fragment bitrate negotiation, but still completely codec agnostic. Re Firefox, needs almost latest version. Media players support DASH too.

So far, the best looking courses seem to be:

- Media server nginx-rtmp-module (in my case with pull directive towards the ipcam’s rtsp) with mpeg-dash output and dash.js based webpage. I might have misunderstood something but it might actually just work (assuming that the bitrate negotiation could always end up just choosing the ipcam’s fixed bitrate; something very low is completely sufficient anyway).

- Debug libogg + libtheora to find out why it produces corrupted streams – have fun!

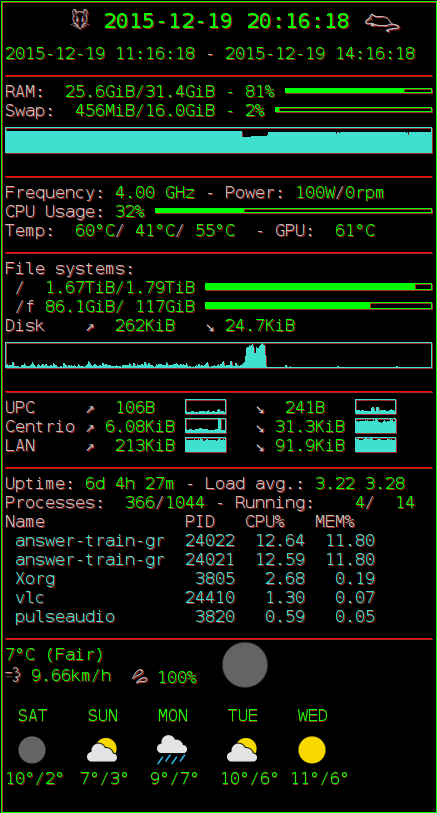

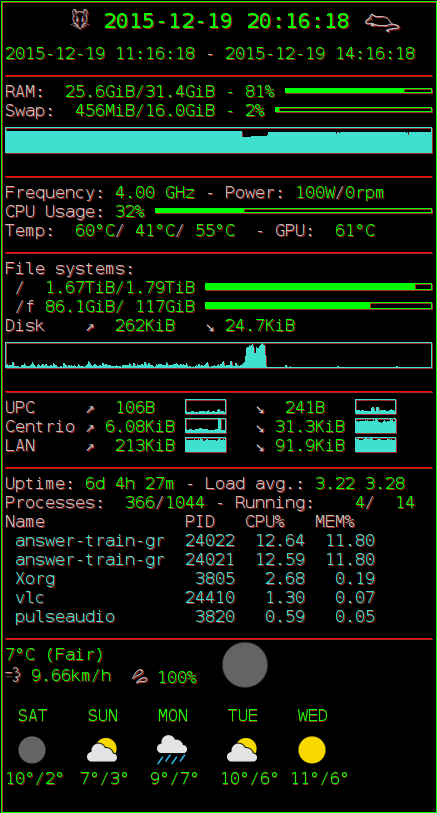

A couple of weeks ago, I have created my own fairly elaborate setup of the Conky system monitor. I have been wanting to fix up some of the weather display aspects, but I’m realistically not getting around to that anytime soon.

So, I have pushed it out to Github now.